Google and Apple have joined forces to issue a common API that will run on their mobile phone operating systems, enabling applications to track the people you come “into contact” with in order to slow the spread of the COVID-19 pandemic. It’s an extremely tall order to do so in a way that is voluntary, respects personal privacy as much as possible, doesn’t rely on potentially vulnerable centralized services, and doesn’t produce so many false positives that the results are either ignored or create a mass panic. And perhaps much more importantly, it’s got to work.

Slowing the Spread

As I write this, the COVID-19 pandemic seems to be just turning the corner from uncontrolled exponential growth to something that’s potentially more manageable, but it’s not clear that we yet see an end in sight. So far, this has required hundreds of millions of people to go into essentially voluntary quarantine. But that’s a blunt tool. In an ideal world, you could stop the disease globally in a couple of weeks if you could somehow test everyone and isolate those who have been exposed to the virus. In the real world, truly comprehensive testing is impossible, and figuring out whom to isolate is extraordinarily difficult due to two factors: COVID-19 has a long incubation period during which it is nonetheless transmissible, and some or even most people don’t know they have it. How can you stop what you can’t see when, even once you can detect it, it’s a week too late?

One promising approach is to isolate those people who’ve been in contact with known cases during the stealth contagion period. To do this is essentially to keep a diary of everyone you’ve been in contact with for the last week or two, and then if you eventually test positive for COVID-19, alert them all so that they can keep from infecting others even before they test positive: track and trace. Doctors can do this by interviewing patients who test positive (this is the “contact tracing” we’ve been hearing so much about), but memory is imperfect. Enter a technological solution.

Proximity Tracing By Bluetooth LE

The system that Apple and Google are rolling out aims to allow people to see if they’ve come into contact with others who carry COVID-19, while respecting their privacy as much as possible. It’s broadly similar to what was suggested by a team at MIT led by Ron Rivest, a group of European academics, and Singapore’s open-source BlueTrace system. The core idea is that each phone emits a pseudo-random number using Bluetooth LE, which has a short range. Other nearby phones hear the beacons and remember them. When a cellphone’s owner is diagnosed with COVID-19, the numbers that person has beaconed out are then released, and your phone can see if it has them stored in its database, suggesting that you’ve had potential exposure, and should maybe get tested.

Notably, and in contrast to how tracking was handled in South Korea or Israel, no geolocation data is necessary to tell who has been close to whom. And the random numbers change frequently enough that they can’t be used to track your phone throughout the day, but they’re generated by a single daily key that can be used to link them together once you test positive.

(In Singapore, the government’s health ministry also received a copy of everyone’s received beacons, and could do follow-up testing in person. While this is doubtless very effective, due to HIPAA privacy rules in the US, and similar patient privacy laws in Europe, this centralized approach is probably not legal. We’re not lawyers.)

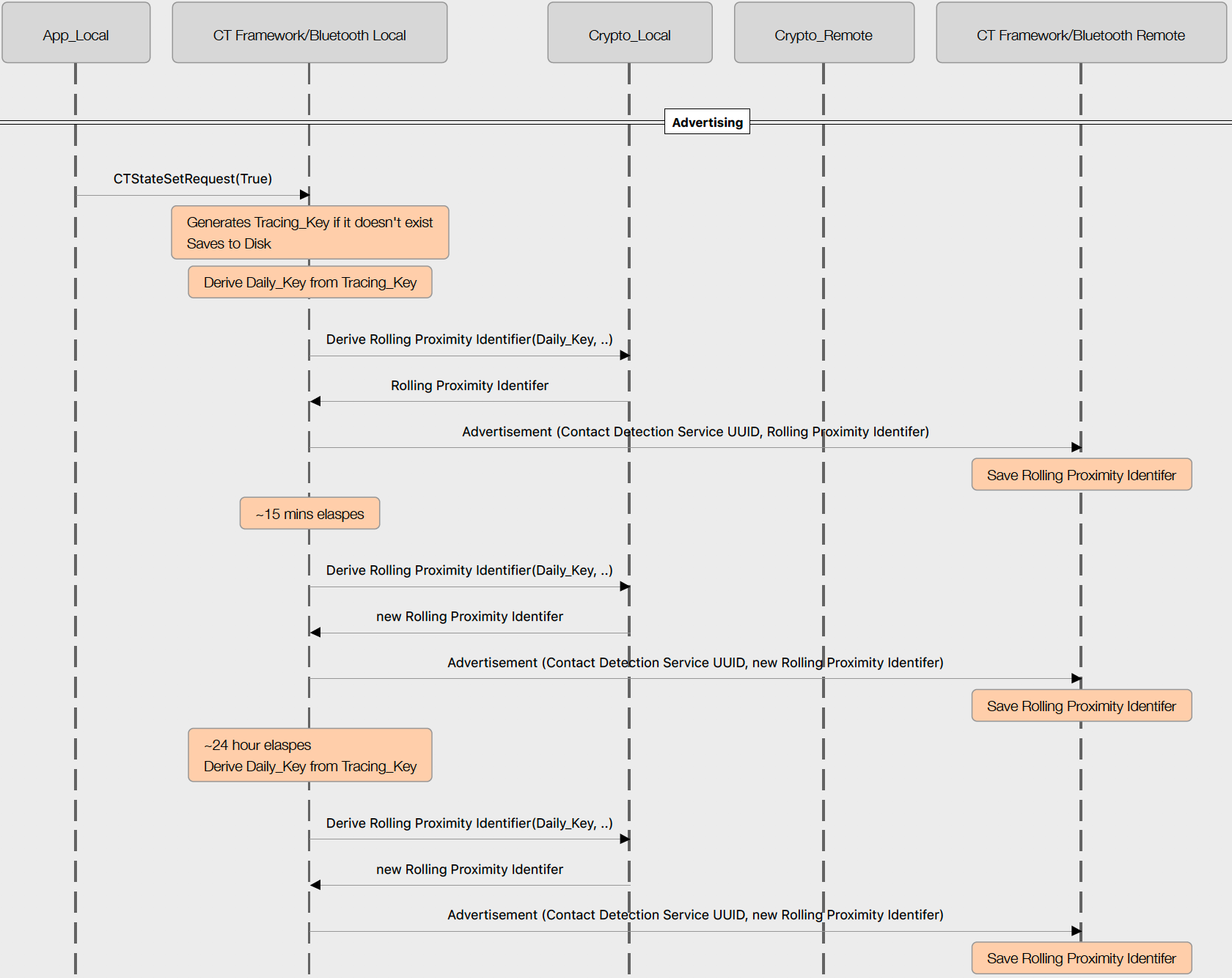

The Crypto

In the Apple/Google crypto scheme (PDF), your phone generates a secret “tracing key” once, and that key is specific to your device. It then uses that secret key to generate a “daily tracing key” every day, and from each daily key it generates a new “rolling proximity identifier” every 15 minutes. Each more frequent key is generated from its parent by hashing with a timestamp. That makes it nearly impossible to go up the chain, for instance to deduce your daily key from the proximity identifiers that are beaconed out, while anyone who holds a parent key can easily regenerate and verify everything downstream of it. The 32-byte tracing key is big enough that collisions are extremely unlikely, and the individual-specific tracing key need never be revealed.
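To make the key chain concrete, here is a minimal Python sketch of the idea. It is not the code from the spec: it assumes HMAC-SHA256 as the one-way function, and the labels `b"daily"` and `b"rolling"`, the 16-byte truncation, the helper names, and the example day number are all made up for illustration. Only the 32-byte tracing key and the 15-minute interval come from the description above.

```python
import hashlib
import hmac
import os

INTERVALS_PER_DAY = 24 * 60 // 15  # 96 fifteen-minute slots per day


def derive(parent_key: bytes, label: bytes, counter: int) -> bytes:
    """One-way step: keyed hash of a label plus a time counter (assumed scheme)."""
    message = label + counter.to_bytes(4, "big")
    # Truncating to 16 bytes is an illustrative choice, not taken from the article.
    return hmac.new(parent_key, message, hashlib.sha256).digest()[:16]


def daily_tracing_key(tracing_key: bytes, day_number: int) -> bytes:
    """Derive the key for one day from the device's long-term secret."""
    return derive(tracing_key, b"daily", day_number)


def rolling_proximity_identifier(daily_key: bytes, interval: int) -> bytes:
    """Derive the identifier beaconed during one 15-minute slot of that day."""
    return derive(daily_key, b"rolling", interval)


tracing_key = os.urandom(32)  # generated once, never leaves the phone
day_key = daily_tracing_key(tracing_key, day_number=18365)  # hypothetical day count
beacons = [rolling_proximity_identifier(day_key, i) for i in range(INTERVALS_PER_DAY)]
```

Anyone holding `day_key` can regenerate all 96 entries of `beacons`, but a listener who only hears the beacons has no practical way to work back to `day_key`, let alone to `tracing_key`.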

If you test positive, your daily keys for the time window that you were contagious are uploaded to a “Diagnosis Server”, which then sends these daily keys around to all participating phones, allowing each device to check the beacons that it received against the list of contagious people-days that it gets from the server. None of your exposure data needs to leave your phone, ever.

The diagnosis server uses the daily keys of contagious individuals so that your phone will be able to distinguish between a single short contact, where only one identifier from a certain daily key was seen, and a longer, more dangerous contact, where multiple 15-minute identifiers were seen from the same infected daily key, even though your phone can’t otherwise link the rolling proximity identifiers together over time. And because the daily keys are derived from your secret tracing key in a one-way manner, the server doesn’t need to know anything about your identity if you’re infected.
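The on-phone check might look roughly like the sketch below. For each published daily key, the phone regenerates that day’s identifiers and counts how many of them it actually heard. The derivation function is an assumed HMAC-SHA256 stand-in rather than the spec’s construction, and `rolling_id`, `count_matches`, and the sample keys are hypothetical names and values.

```python
import hashlib
import hmac
from collections import Counter

INTERVALS_PER_DAY = 96  # one identifier per 15 minutes


def rolling_id(daily_key: bytes, interval: int) -> bytes:
    """Assumed one-way derivation of a rolling identifier from a daily key."""
    message = b"rolling" + interval.to_bytes(4, "big")
    return hmac.new(daily_key, message, hashlib.sha256).digest()[:16]


def count_matches(published_daily_keys, heard_beacons) -> Counter:
    """Count, per published daily key, how many of its identifiers were heard."""
    heard = set(heard_beacons)
    matches = Counter()
    for key in published_daily_keys:
        for interval in range(INTERVALS_PER_DAY):
            if rolling_id(key, interval) in heard:
                matches[key] += 1
    return matches


# Hypothetical daily keys, standing in for three other phones.
infected_key = bytes(16)
passerby_key = bytes([1]) * 16
healthy_key = bytes([2]) * 16

# What this phone overheard: three slots near one person, one slot near two others.
heard = [rolling_id(infected_key, i) for i in (40, 41, 42)]
heard.append(rolling_id(passerby_key, 10))
heard.append(rolling_id(healthy_key, 5))

# Only the first two people tested positive, so only their keys are published.
result = count_matches([infected_key, passerby_key], heard)
```

Here `result[infected_key]` is 3 and `result[passerby_key]` is 1, while the beacon from `healthy_key` matches nothing because that key was never published. All of the matching happens locally, so the list of beacons the phone heard never has to be uploaded.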

The ACLU produced a whitepaper that predates the proposed plan, but covers what they’d like to see addressed on the privacy and security fronts, and viewed in that light, the current proposition scores quite well. That it lines up with other privacy preserving “contact tracing” proposals is also reassuring.

What Could Possibly Go Wrong?

The most obvious worry about this system is that it will generate tons of false positives. A Bluetooth signal can penetrate walls that viruses can’t. We imagine an elevator trip up a high-rise apartment building “infecting” everyone who lives within radio range of the shaft. At our local grocery store, the cashiers are fairly safely situated behind a large acrylic shield, but Bluetooth will go right through it. The good news is that these chance encounters are probably short, and a system could treat these single episodes as a low-exposure risk in order to avoid overwhelming users with false alarms. And if people are required to test positive before their daily keys are uploaded, rather than the phones doing something automatically, it prevents chain reactions.

But if the system throws out all of the short contacts, it also misses that guy with a fever who has just sneezed while walking past you in the toilet paper aisle. In addition to false positives, there will be non-detection of true positives. Bluetooth signal range is probably a decent proxy for actual exposure, but it’s imperfect. Bluetooth doesn’t know if you’ve washed your hands.
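The tradeoff can be shown with a toy example. This sketch assumes an app that alerts purely on the number of 15-minute identifiers matched per contact. The contact names, the counts, and the `min_intervals` threshold are invented for illustration and don’t come from any real app.

```python
def classify(contacts: dict, min_intervals: int) -> dict:
    """Label each contact 'alert' or 'ignore' by its matched-interval count."""
    return {
        name: "alert" if intervals >= min_intervals else "ignore"
        for name, intervals in contacts.items()
    }


# Hypothetical matched-interval counts for three contacts with infected people.
contacts = {
    "neighbor_near_elevator": 1,  # through a wall: no real exposure
    "sneezer_in_aisle": 1,        # brief but genuinely risky
    "coworker": 12,               # three hours in the same room
}

lenient = classify(contacts, min_intervals=1)  # warns about everyone
strict = classify(contacts, min_intervals=2)   # drops all one-slot contacts
```

With `min_intervals=1`, the neighbor behind the wall triggers a false alarm. With `min_intervals=2`, that false alarm disappears, but so does the warning about the sneezer. Bluetooth alone can’t tell the two apart.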

The cascaded hash of secret keys makes it pretty hard to fake the system out. Imagine that you wanted to fool us into thinking that we had been exposed. You might be able to overhear some of the same beacons that we do, but you’ll be hard-pressed to derive a daily key that will, at that exact time, produce the rolling identifier that we received, thanks to the one-way hashing.

Mayhem, Key Loss, and It’s Only an API

What we can’t see, however, is what prevents a malicious individual from claiming they have tested positive for COVID-19 when they haven’t. If reporting to the diagnosis servers is not somehow audited, it might not take all that many antisocial individuals to create an illusory outbreak by walking a phone around in public and then reporting it. Or doing the same with a programmable Bluetooth LE device. Will access to the diagnosis server be restricted to doctors? Who? How? What happens when a rogue gets the credentials?

The presence of a secret tracing key, even if it is supposed to reside only on your phone, is a huge security and privacy risk. It is the keys to the castle. A cellphone is probably the least secure computing device that you own — apps that leak data about you abound, whether intentionally malicious or created by corporations that simply want to know you better. If we’re lucky, this tracing key is stored encrypted on the phone, at least making it harder to get at. But it can’t be stored safely (read: hashed) because it needs to be directly available to the system to generate the daily keys.

Will diagnosis keys be revocable? What happens when a COVID test yields a false positive? You’d certainly want to be able to un-warn all of the people in the system. Or do we require two subsequent positive tests before registering with the server? And how long is a person contagious after being tested? We hope that a diagnosed individual would stay confined at home, but we’re not naive enough to believe that it always happens. When does reporting of daily keys stop? Who stops it?

Which brings us to the apps. What Apple and Google are proposing is only an application programming interface (API), to be eventually replaced with an OS-level service. This infrastructure only enables third parties to create their Bluetooth-tracker applications, the diagnosis servers, and everything else that sits on top of it. The work there remains TBD. These will naturally have to be written by trusted parties, probably national health agencies. Even if this is a firm foundation, trust will have to be placed higher up along the chain as well. For instance, it says in the spec that “the (diagnosis) server must not retain metadata from clients”, and we’ll have to trust them to be doing that right, or else the promise of anonymity is in danger.

We’re nearly certain that malign COVID-19 apps will also be written to take advantage of naive users — see the current rash of coronavirus-related phishing for proof.

Will it Work?

This is uncharted territory, and we’re not really sure that this large-scale tracing effort will even work. Something like this did work in Singapore, but there are many confounding factors. For one, the Singapore system reported contacts directly to the Health Ministry, and an actual person would follow up on your case thereafter. The raw man- and woman-power mobilized in the containment effort should not be underestimated. Secondly, Singapore also suffered through SARS and mobilized amazingly effectively against the avian flu in 2005. They had practiced for pandemics, caught the COVID-19 outbreak early, and got ahead of the disease in a way that’s no longer an option in Europe or the US. All of this is independent of Bluetooth tracking.

But as the disease lingers on, and the acute danger of overwhelmed hospitals wanes, there will be a time when precautions that are less drastic than self-imposed quarantine become reasonable again. Perhaps then a phone app will be just the ticket. If so, it might be good to be prepared.

Will it work? Firstly, and this is entirely independent of servers or hashing algorithms, if COVID-19 tests aren’t widely and easily available, it’s all moot. If nobody gets tested, the system won’t be able to warn people, and they in turn won’t get tested. The system absolutely hangs on the ability of at-risk participants to get tested, and, ironically, proper testing and medical care may render the system irrelevant.

Human nature will play against us here, too. If someone gets a warning for a few days in a row, but doesn’t feel sick, they’ll disregard the flashing red warning signal, walking around for a week in disease-spreading denial. Singapore’s system, where a human caseworker comes and gets you, is worlds apart in this respect.

Finally, adoption is the elephant in the room. The apps have yet to be written, but they will be voluntary, and can’t help if nobody uses them. But on the other side of the coin, if contact tracing does get widely used, it will become more effective, which will draw more participants in a virtuous cycle.

The Devil is in the Details

The framework of the Apple/Google system seems fairly solid, and most of the remaining caveats look like they’re buried in the implementation. The tradeoff between false positives and missed cases is determined by how many and what kind of Bluetooth contacts the system reads as “contagious”. App and server security are TBD, but can in principle be handled properly. Assuming that all of the collateral resources, like easily available COVID-19 tests, can be brought into play, all that’s left is the hard part. How can we make sure it goes as well as possible?

The Electronic Frontier Foundation came out with a report just after the Apple/Google framework was released, and while it gives a seal of approval to the basics, it also has a laundry list of safeguards for any applications that are developed on that framework. Consent, minimization of data collection, information security, transparency, absence of bias, and the ability to expire the data once the crisis is over are their guidelines. These line up with the recommendations of the German Chaos Computer Club, who additionally push for open-source code so that it can be audited by those who have concerns about how their data is being handled.

It’s heartening to see many of the privacy concerns addressed by Google and Apple as well, but we’d like to hear some discussion about what their criteria are for shutting the whole thing down when it’s all over. A global pandemic is perhaps a good time to doff your tinfoil hat in service of the greater good, but we do not want ubiquitous contact tracing to become the new normal.

While some might dismiss privacy concerns and software details as secondary to getting us through the COVID-19 pandemic, the success or failure of this project lies in whether or not people opt in, get tested when they should, and behave appropriately when they show up positive. People will only opt in if they trust the system, and they will only get tested if that’s made as easy as possible. Only one of these two can be solved with software.